Why AI Will Never Replace Therapists

Explore why artificial intelligence, despite rapid advances, will never fully replace human therapists. Drawing on recent Stanford University research, this post highlights AI's critical limitations, including expressions of stigma, an inability to manage crises effectively, and the lack of essential human attributes needed for true therapeutic relationships.

Last Updated: June 2, 2025

What You'll Learn

- Why AI lacks the empathy, emotional intelligence, and human connection essential to effective therapy.

- Specific ways in which AI chatbots fail in mental health care, including reinforcing harmful beliefs and failing to recognize crises.

- Key findings from a Stanford University study that detail the limitations and risks of relying on AI for mental health treatment.

- How therapists uniquely create therapeutic alliances, ensuring safe, ethical, and impactful mental health care that AI cannot replicate.

Artificial intelligence (AI) is making remarkable strides in a number of fields, from healthcare to hospitality. Many of us feel a little threatened by everything AI can do, and it is natural to wonder whether it will one day replace mental health professionals.

While AI has many strengths, it can never replicate or replace the human element in the therapeutic process. In this blog, we'll explore why AI will never replace therapists and delve further into the indispensable role therapists, psychiatrists and all mental health professionals have in the betterment of individuals' mental health.

The Role of Chatbots in Therapy

The rise of AI therapists like Woebot and Wysa has sparked controversy among mental health professionals, and for good reason. Some see these bots as a solution to the nationwide clinician shortage, while others worry they could spell the end of traditional psychotherapy.

Can AI Be Used as a Therapist?

In theory, a therapy chatbot can act as a virtual therapist by conversing with patients and asking them questions about their experiences and feelings. It can even recommend exercises for patients to do outside of the conversation.

On the one hand, these applications could fill a very real void for those who lack access to quality psychological care. On the other, though, they can't provide the same kind of connection a human therapist can — and when the U.S. Surgeon General declares loneliness a public health crisis, it becomes clear that chatting with a computer won't meet that need.

The Impact of AI on the Profession of Therapy

Although AI cannot replicate a live therapist, it does have some strengths that can enhance a therapist's practice. One of AI's biggest advantages is its ability to rapidly analyze massive quantities of data, which lets it identify patterns humans might miss. Therapists can use this capability to strengthen their practice in the following ways:

- Analyzing patient speech and writing for signs of distress using natural language processing (NLP)

- Helping psychiatrists select the right medications based on patient data

- Identifying potential areas of improvement for trainees and students

- Directing patients to crisis resources outside office hours

- Evaluating therapist performance and providing recommendations for future sessions

- Translating conversations in real time to provide aid to people who speak English as a second language

Ultimately, the key is to find ways this technology can improve therapeutic techniques instead of taking the therapist out of the picture completely.

The Limitations of AI in Understanding Human Emotions

While AI chatbots can use language that sounds empathetic, they are still machines — they literally cannot put themselves in other people's shoes, which is critical for understanding where a patient is coming from. This lack can cause the AI to generate responses that don't align with the patient's needs, instead pushing for treatments that the patient isn't yet ready for.

Why AI Can't Mimic the Empathy of Therapists

At the heart of therapeutic practice, regardless of the mental health professional, lies the profound significance of the emotional connection between the practitioner and their clients. Mental health professionals like therapists bring to the table an abundance of authentic empathy, unwavering compassion and profound understanding that extends beyond mere data analysis.

Although AI systems can attempt to simulate empathy, they ultimately fail to replicate the genuine emotional comprehension that grows out of shared human experiences nurtured by compassionate therapists and mental health providers. AI cannot create the kind of safe therapeutic space in which individuals feel able to reveal their deepest emotions, fears and vulnerabilities. AI will never be able to give a hug, share tears or hold the hand of a client in need.

The Inability of AI to Understand Human Nuance

Most generative AI programs rely on existing data sets, which can cause them to miss subtle details about a patient's personality or condition. For example, while a chatbot could be useful in providing crisis care outside of regular work hours, most bots aren't sophisticated enough to properly identify when a patient is in crisis.

Here's another example. In 2023, the National Eating Disorders Association (NEDA) attempted to replace its helpline with Tessa, an AI chatbot designed to aid people struggling with eating disorders. Instead of providing users with helpful resources, Tessa dispensed triggering weight loss advice that could have had serious consequences.

Nonverbal Communication and Body Language

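Nonverbal communication, including body language, tone of voice, and facial expressions, carries much of the emotional content of human interaction. In Albert Mehrabian's widely cited studies, tone of voice and facial expression accounted for up to 93% of how listeners judged a speaker's feelings when the words and the delivery conflicted, although that figure is often overgeneralized to all communication. Professionals in the mental health field are trained to spot these subtle cues, which can greatly assist in assessing a client's emotional state. A text-based chatbot cannot perceive these cues, which limits how accurately it can read a client.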

The Importance of the Human Experience in Therapy

Real psychotherapy relies on human interaction. The therapist uses their skills to gain a complete understanding of what the patient is going through, while the patient benefits from the therapist's support and questioning.

Many AI chatbots are capable of sustaining a long conversation and asking relevant follow-up questions, but they can't really understand what a patient is talking about. While that's enough for people who just want to be heard, anyone seeking real clinical treatment is at a disadvantage.

The Therapist-Patient Relationship: A Barrier for AI

There is substantial evidence that a strong relationship between the patient and therapist is a critical component of effective treatment.

Because it's a machine, an AI therapist can't bond with a patient the same way a human might. The patient might feel trust toward the AI, but at the end of the day, the interaction is completely one-sided — which is detrimental to real growth.

Personalized and Tailored Treatment

Individuals are unique, and their therapy needs are equally diverse. In order to meet the specific needs of each client, therapists adapt their approach, giving the client what they need in order to better their mental health. They consider the client's background, experiences and emotions, creating a personalized treatment plan.

Artificial intelligence, by contrast, relies on algorithms and data patterns rather than emotions and lived experience. These analyses can generate valuable insights, but it takes human creativity to adapt those insights into a real-life plan.

Dynamic Adaptation

As clients progress through treatment, their needs naturally change — that's why therapists adapt their approach over time. They can change strategies, interventions and techniques to suit the evolving situation.

Most AI systems use predefined algorithms and responses, thereby lacking the flexibility and intuition of human therapists.

The Ethical Implications of AI in Therapy

Like any new therapeutic technique, AI therapy brings with it a host of ethical and moral questions. What is the technology's potential for harm? Do therapist AI tools consistently adhere to HIPAA and other healthcare quality and privacy standards? There still isn't enough data to say for certain.

Although the FDA has designated patient-facing mental health tech tools like AI chatbots as “low-risk,” AI has a long way to go before it can provide the kind of ethical treatment patients need.

Bias and Discrimination

There's also a real concern about AI showing bias toward certain groups due to neglect on the part of the developers. Failure to train AI on diverse data sets can result in an algorithm that excludes marginalized racial, sexual and religious groups — which can potentially cause more harm than good when interacting with those populations.

For example, implicit discrimination is one of the biggest barriers marginalized groups face when seeking mental health treatments. Providers who fail to account for intersectionality and intergenerational trauma can accidentally subject their patients to microaggressions and harmful stereotypes, which can interfere with treatment. However, with proper education and training, the human therapist can realize when they've made a mistake and apologize.

Take that situation and replace the human therapist with an unfeeling bot that only has biased data to inform its responses. While a human therapist has the potential to do harm, a bot can't really apologize for its actions, which can further alienate the patient.

Lack of Ethical and Moral Judgment

The mental health field is not black and white but rather filled with gray areas. AI does not understand gray; it operates on logic, not emotion. Clients often seek therapy to navigate complex moral and ethical dilemmas. As mental health professionals, we provide guidance based on our professional training and ethical principles. When dealing with sensitive matters, AI lacks the moral and ethical judgment humans possess.

Inability to Build Therapeutic Rapport

Trust and rapport are essential to a working therapeutic relationship between mental health professionals and their clients. This bond of trust is built on a foundation of reliability, consistency, and unwavering commitment to the client's well-being — and it can take weeks, months or even years for that foundation to develop. Clients can confide in their therapists without the fear of judgment or breach of confidentiality, knowing that their best interests are at the forefront of the therapeutic journey.

When a client fully trusts their clinician and builds excellent rapport, they can be completely open and vulnerable with them. AI, being algorithm-based, doesn't possess the capacity to develop this kind of trust and rapport, and it lacks the depth of human connection or ability to offer a comforting presence.

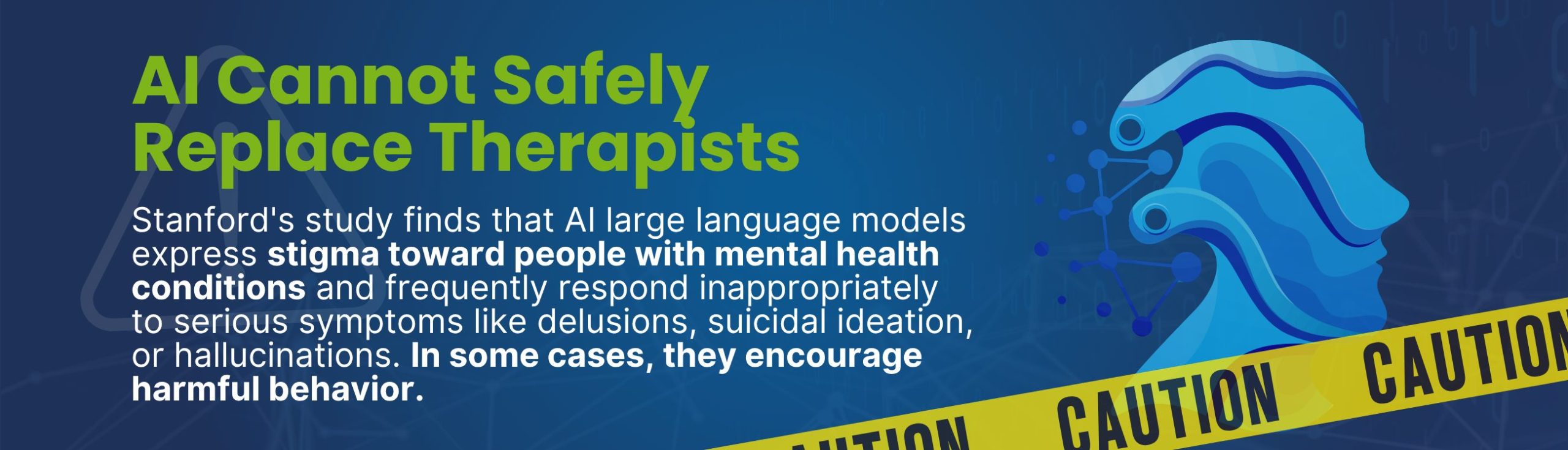

Recent Stanford Study Identifies AI Chatbot Limitations for Mental Health

Recent research from Stanford University highlights critical limitations of AI-powered chatbots in mental health contexts. In their comprehensive evaluation, Stanford researchers found that Large Language Models (LLMs), including advanced chatbots like GPT-4o, failed to safely and ethically replicate essential aspects of therapeutic relationships. Specifically, these AI systems demonstrated significant shortcomings by:

- Expressing stigma toward individuals with mental health conditions, thereby potentially exacerbating clients' struggles with acceptance and seeking care.

- Responding inappropriately to critical situations, such as encouraging delusional thinking due to their inherent tendency toward sycophancy, that is, agreeing excessively with users rather than constructively challenging harmful beliefs.

- Failing to recognize mental health crises, notably suicidal ideation, and providing dangerous information or suggestions rather than urgently necessary interventions.

The Stanford researchers emphasize that a foundational component of therapy—the therapeutic alliance—depends heavily on human attributes such as identity, empathy, and genuine emotional engagement. These are qualities that current LLMs fundamentally lack, underscoring that these systems cannot fully replicate the nuanced, trust-based interactions therapists foster with their clients.

Given these practical and foundational limitations, the study concludes decisively that AI chatbots, regardless of their sophistication, are inappropriate replacements for qualified mental health providers. Instead, AI could serve beneficial supporting roles, such as administrative assistance or therapist training, but the nuanced human dynamics essential to effective therapy remain irreplaceable.

This study reinforces the stance that human therapists provide irreplaceable emotional intelligence, adaptive judgment, and authentic relational connection that AI, by its very nature, cannot replicate.

Final Thoughts: Will AI Replace Therapists?

While AI advancements offer intriguing possibilities for enhancing mental health care, recent Stanford research underscores the clear and fundamental limits of AI in replacing human therapists. Clinicians should thoughtfully consider the role of AI in their practices, embracing tools that amplify their therapeutic abilities rather than seeking to substitute them entirely.

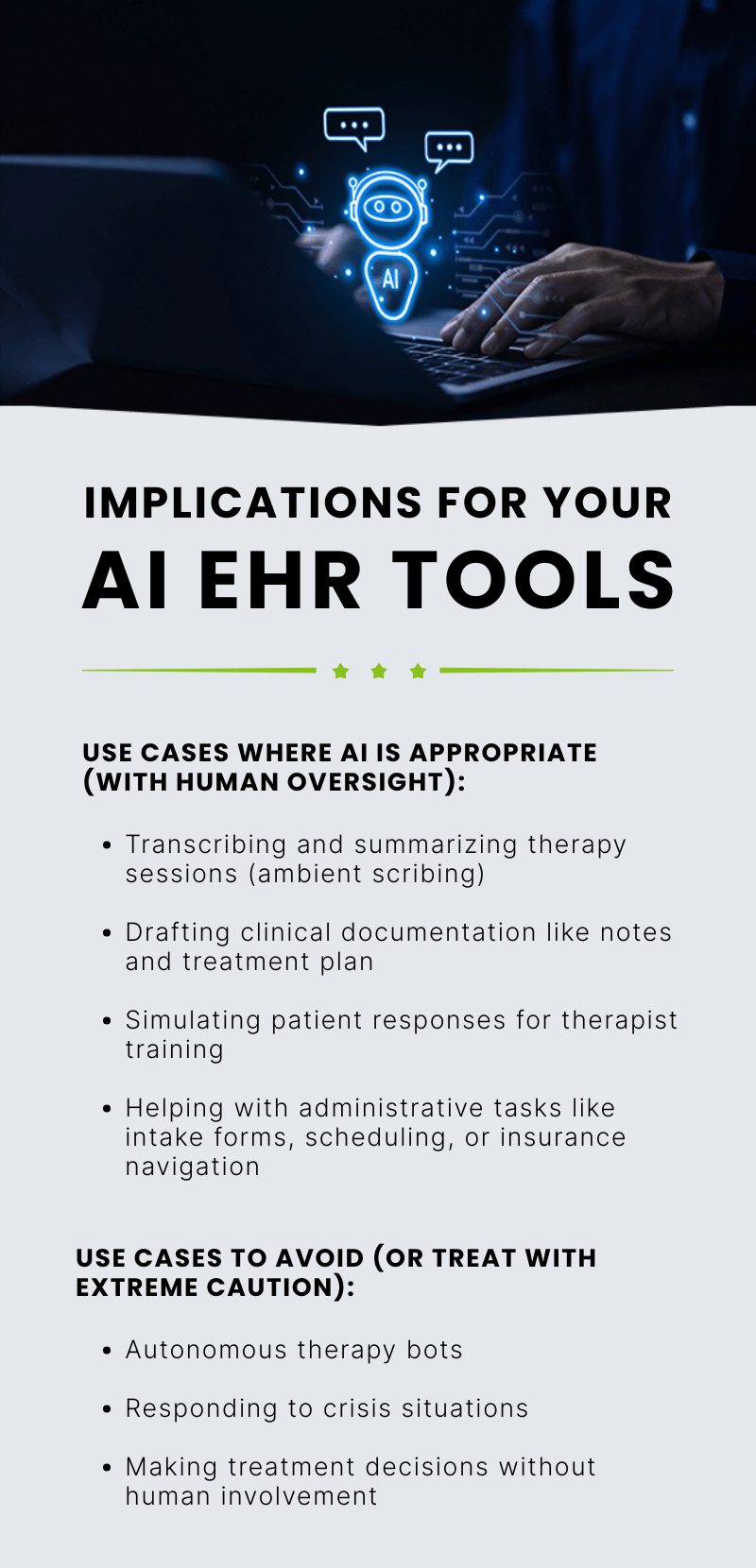

Implications for Your AI EHR Tools:

When thoughtfully incorporated with proper oversight, AI can significantly streamline your practice in beneficial ways:

Use Cases Where AI Is Appropriate (with human oversight):

- Transcribing and summarizing therapy sessions (ambient scribing): AI tools can capture session notes efficiently, allowing you to focus fully on the patient without distraction.

- Drafting clinical documentation like notes and treatment plans: Leveraging AI for initial documentation drafts can enhance accuracy and consistency, saving valuable clinician time.

- Simulating patient responses for therapist training: AI simulations can help clinicians develop their skills by providing realistic, interactive training scenarios.

- Helping with administrative tasks like intake forms, scheduling, or insurance navigation: Automating routine administrative processes reduces your workload, letting you concentrate more on patient care.

Use Cases to Avoid (or Treat with Extreme Caution):

- Autonomous therapy bots: Due to significant ethical and safety risks, AI should never independently manage therapeutic conversations or relationships.

- Responding to crisis situations: Human judgment is irreplaceable when responding to critical mental health emergencies such as suicidal ideation or psychosis.

- Making treatment decisions without human involvement: Clinical decisions must remain clinician-driven; AI insights should inform, not dictate, care plans.

By understanding and respecting these clear boundaries, clinicians can use AI responsibly, optimizing both their practice efficiency and the quality of patient care—without compromising safety or the essential human touch at the heart of therapy.

ICANotes Position on AI Technology

At ICANotes, we believe technology should support—not substitute—the clinical expertise and therapeutic relationships that define quality mental health care. That’s why our approach to incorporating AI into our EHR platform is centered on augmenting, not replacing, clinicians. We focus our development efforts on tools that reduce administrative burden—such as AI-assisted documentation and workflow automation—while leaving all clinical decision-making in the hands of licensed providers.

All client-facing AI features in ICANotes will include human-in-the-loop safeguards to ensure safety, appropriateness, and professional oversight. We’re committed to transparency in communicating the boundaries of AI capabilities to both providers and patients. By grounding our AI strategy in clinical responsibility and ethical clarity, ICANotes ensures that technology enhances—never undermines—the heart of mental health care.

At ICANotes, our mission is to help mental health professionals deliver the highest-quality care. That's why we designed an EHR solution specifically for the behavioral health field — you can record better notes in less time so you can put all your focus into talking with your patient.

Start your free trial today -- no credit card required! Or book a demo with one of our product specialists.

Start Your 30-Day Free Trial

Experience the most intuitive, clinically robust EHR designed for behavioral health professionals, built to streamline documentation, improve compliance, and enhance patient care.

- Complete Notes in Minutes – Purpose-built for behavioral health charting

- Always Audit-Ready – Structured documentation that meets payer requirements

- Keep Your Schedule Full – Automated reminders reduce costly no-shows

- Engage Clients Seamlessly – Secure portal for forms, messages, and payments

- HIPAA-Compliant Telehealth built into your workflow

Dr. October Boyles is a behavioral health expert and clinical leader with extensive expertise in nursing, compliance, and healthcare operations. With a Doctor of Nursing Practice (DNP) from Aspen University and advanced degrees in nursing, she specializes in evidence-based practices, EHR optimization, and improving outcomes in behavioral health settings. Dr. Boyles is passionate about empowering clinicians with the tools and strategies needed to deliver high-quality, patient-centered care.